I teach college courses and I spend a lot of time on Twitter, which means that in the last few months I have seen many articles and discussions examining the educational implications of OpenAI’s ChatGPT3 dialog bot. For anyone who is unfamiliar, the most recent ChatGPT interface has been praised, ominously, in mainstream media for the realism and detail of its responses to a wide range of prompts. It is no surprise that this technology has also caused a panic, especially among college and high school educators who worry their students will use the tool to cheat. While I think persuasive, effective, and flexible AI-generated text raises many serious concerns, I am not worried about my students using ChatGPT to complete assignments. After spending some time with the software, count me among the faculty members who, like John Warner, consider ChatGPT “a bullshitter.”

I teach liberal arts courses about music whose assignments are almost always expository and based on existing media (readings, music, etc.), and I don’t see ChatGPT as a cheat code for my classes. One reason is that AI simply can’t do the unique work I ask of my students, which involves writing, analyzing listening examples, and even creating music in class. But, more generally, I simply don’t believe ChatGPT can pull off the kind of critical thinking I expect, and I’m willing to put this belief to the test and share this experiment with all of you.

This post is the first in a series that will put my own teaching on the line by grading ChatGPT’s performance with real questions I ask my students.

Throughout the coming semester, I will treat ChatGPT like one of the students in my ‘Music & Meaning In Our Lives’ course at the University of Michigan. I will ask the AI to respond to the same questions and media examples that I present to my class. Although I will give ChatGPT a grade at the end of each post in this series, I admit this is a gimmick. My human students do much, much more work than what I can ask ChatGPT to accomplish, as many of the course activities fall far beyond this software’s functionality. Nevertheless, I anticipate a valuable and reflective experience, as the AI-generated responses should inspire me to consider this course’s content and structure in new ways.

Subscribe and follow along for my updates between now and April!

ChatGPT tells me the answer to “What Is Music?”

Based on my experiments with ChatGPT prior to this term, I expect the software will struggle with many of this course’s concepts and content. As I have written in the past, ‘Music & Meaning In Our Lives’ is expansive in its exploration of the process through which musical materials and cultural contexts interact to generate meaning through our individual listening experience. The course engages with an incredible range of topics and media in order to facilitate my students’ self-actualization as listeners. ChatGPT can’t listen to music, though other AI/machine learning programs can.

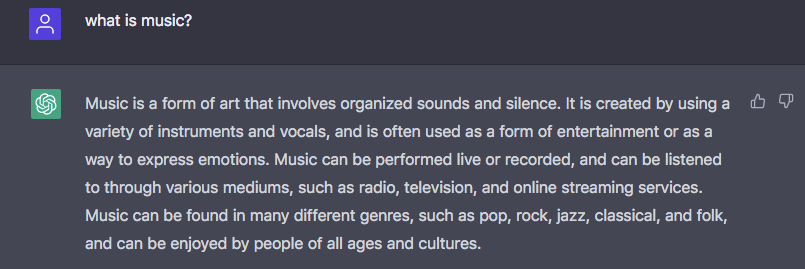

Yet, the material at the beginning of the semester is general. One of the initial questions I give my students is, “what is music?”, and this is the first prompt I will give:

This is an acceptable but not exceptional answer. I was happy to see ChatGPT include ‘silence’ in its response, as I often have to remind my students that silence is an expressive component of music (uncharacteristically [as I don’t use a lot of classical music in this class] I use the first minute or so of this Beethoven piano sonata movement to do so). Unfortunately, the rest of this response is pretty generic, so I prompt it to expand on its response:

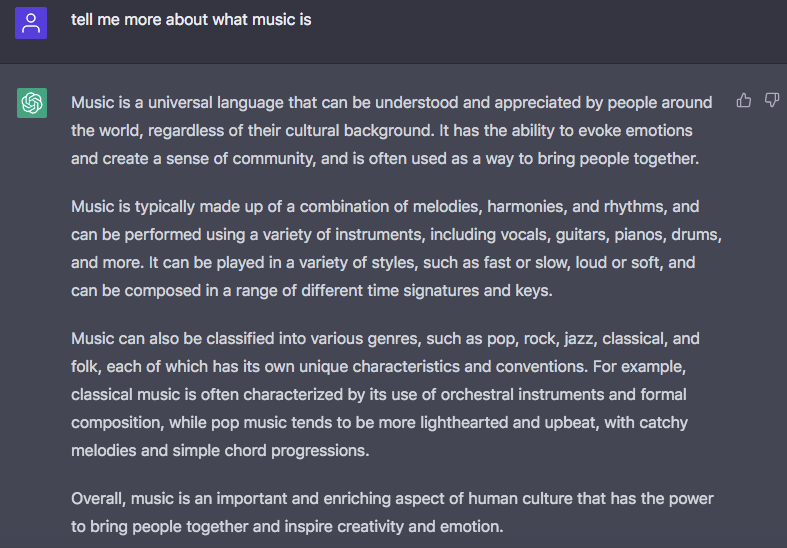

Superficially, this is an impressively detailed extension of the program’s earlier work, but there are several problems I would push back on, were a student to present the same content in class or in an assignment. First of all, the aphorism “music is a universal language” is a cliché. Although music is a cultural practice common to a huge array of human populations over a staggeringly long period of history, it is not a universal language.

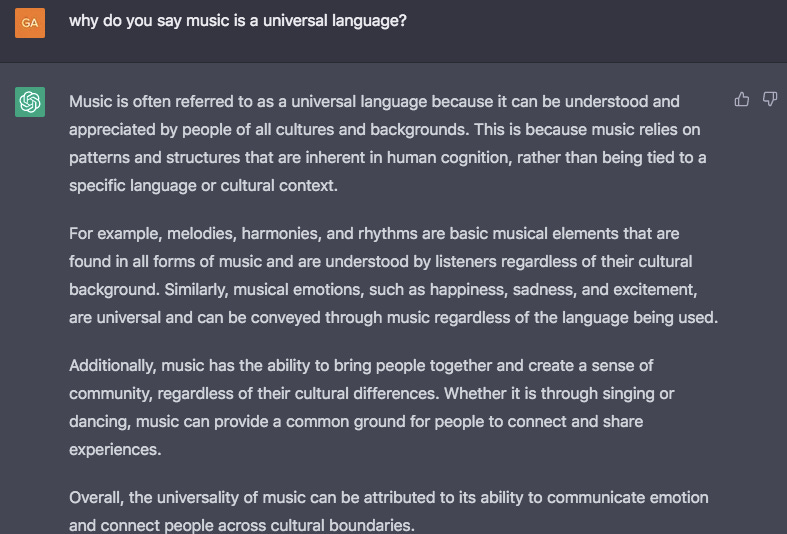

So, I ask ChatGPT to explain why it used that terminology:

This response is also detailed, but flawed in multiple meaningful ways. Despite the verisimilitude of the AI’s writing, its argumentation here is weak because it uses generic platitudes instead of specific evidence to make its point. The strongest element of what ChatGPT proffers is the notion that music can connect people and serve as the fulcrum for the formation of new communities that transcend established cultural differences. But the idea that the meaning of musical materials is fixed and not inflected by cultural contexts is demonstrably incorrect.

In fact, this is one of the central themes of this course, and one of its underlying theories is the dialogic interaction between the internal stimuli of a piece of music’s content and the external lived experience we bring to our listening. Another key idea we return to throughout the semester is the so-called ‘Triangle Of Music Meaning’, which argues that different people can respond to the same piece of music in different ways, often depending on their role (i.e. ‘creator’, ‘interpreter’, ‘listener’) relative to the musical work (we also discuss how a single listener can hold multiple perspectives). Armed with these ideas, I teach my students that musical meaning is pluralistic, personal, and sometimes ephemeral: not permanent and universal.

After engaging ChatGPT with these questions, I think the tone of the software’s responses, namely how confident and self-assured its writing appears to be, must play a significant role in the fear some people hold about its potential impact on teaching. There is no equivocation in ChatGPT’s answers, which helps them seem persuasive. But, if you read closely, it makes mistakes, and these errors are different from those I see from students.

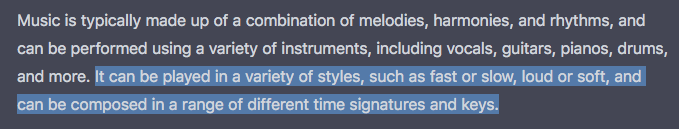

For this round of prompts, the most obvious error is this (from the follow-up to ‘what is music?’):

‘Fast’, ‘slow’, and ‘loud’ are not styles of music, and I don’t know any person who might argue this, at least in their first language (to me, this sentence reads like a mistranslation). I have played around with ChatGPT prior to this post, and I have noticed many moments in the writing, like this one, that give away the reality that an AI program has created the text. Often, these errors require subject matter expertise to identify, but this particular conflation is glaring.

Another, more concerning, takeaway I have from this back-and-forth with ChatGPT is the presence of certain biases in its responses. For example, the way this line from its last response invokes ‘human cognition’ subtly advocates for the superiority of STEM disciplines over the humanities.

The context here is also worrisome because this apparent connection to science validates its argument that music is universal: other analytical frameworks are portrayed as unreliable.

Another instance of bias comes from ChatGPT’s answer to my second prompt, the expanded definition of music, and advances the flawed idea that popular music is necessarily simpler and less serious than classical music.

These arguments are not plainly erroneous like the other mistakes ChatGPT makes here, but, were a student to advance the same ideas, I would challenge them to explain these positions in the hope of illustrating why they are problematic (if I had more time, I would do the same with ChatGPT!). After beginning this experiment, what concerns me as a teacher, music scholar, and citizen is not that members of my classroom will use this technology to cheat, but that the AI-generated text they encounter will perpetuate such biases and launder them through its authoritative tone.

Final Grade: C

The subject matter for this session with ChatGPT was very general, and the program delivered a passable, but mediocre, performance. My next set of questions will be much more specific, so I am very curious to see what ChatGPT comes up with. Be sure to subscribe to follow along!

This is great and I am looking forward to the whole series. What are you including in your syllabus about this technology? Here's my note, to start some discussion in the comments:

--------------

A note on AI-driven writing support.

Recently, freely available AI tools such as ChatGPT and others have been released to the general public. These systems have tremendous potential for revolutionizing how humans communicate with one another, and for generic communication, they can generate written responses that are quite similar to what a human can create.

You are not a generic human. You are a Spartan whose lived experience, opinions, and research have value, both to me and to the world. Written papers are an opportunity to share who you are, and no artificial intelligence, regardless of how developed it becomes, can replace what you have to offer. As a result, when I assign written work, I expect you to write. Attempting to substitute your writing and your personality amounts to an act of theft: it is plagiarism, and you would also be robbing yourself of an expressive opportunity. In most cases, use of these types of tools will result in a failing grade for the assignment, and potentially failure for the entire course.